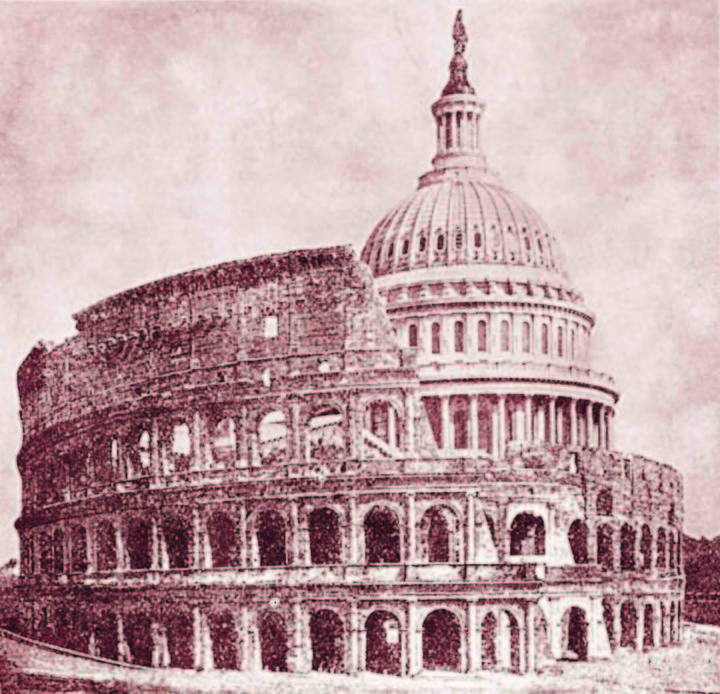

This Week in Viewpoints: from Ancient Rome to her modern parallel.

As usual, I have scrounged up three of the best exemplars of what I’ve been reading this week, for your mental delectation. With the end of the semester fast approaching, every Week in Viewpoints leaves me just a little bit sadder, given that I will soon be moving on to greener pastures—although surely not to better readers.

The first selection appeared in the online magazine Aeon. Walter Scheidel, a Stanford professor, lays out his case in “How the fall of the Roman empire paved the road to modernity.”

Comparisons between Rome and America are old hat, and they are usually prompted by apocalyptic speculation in the vein of “if America collapsed, it would be as bad as the fall of the Roman Empire.” Scheidel argues that, actually, the fall of Rome turned out pretty well in the long run. The thesis of his argument is this: the collapse of Rome allowed pluralism to flourish in Europe, and because of pluralism, Europe built the modern world. Scheidel obviously understands that the collapse of an empire is bad:

It’s true that Rome’s collapse reverberated widely, at least in the western – mostly European – half of its empire. (A shrinking portion of the eastern half, later known as Byzantium, survived for another millennium.) Although some regions were harder hit than others, none escaped unscathed. Monumental structures fell into disrepair; previously thriving cities emptied out; Rome itself turned into a shadow of its former grand self, with shepherds tending their flocks among the ruins. Trade and coin use thinned out, and the art of writing retreated. Population numbers plummeted.

However, as feudalism rose and fell:

Councils of royal advisers matured into early parliaments. Bringing together nobles and senior clergymen as well as representatives of cities and entire regions, these bodies came to hold the purse strings, compelling kings to negotiate over tax levies. So many different power structures intersected and overlapped, and fragmentation was so pervasive that no one side could ever claim the upper hand; locked into unceasing competition, all these groups had to bargain and compromise to get anything done. Power became constitutionalised, openly negotiable and formally partible; bargaining took place out in the open and followed established rules. However much kings liked to claim divine favour, their hands were often tied – and if they pushed too hard, neighbouring countries were ready to support disgruntled defectors.

This deeply entrenched pluralism turned out to be crucial once states became more centralised, which happened when population growth and economic growth triggered wars that strengthened kings. Yet different countries followed different trajectories. Some rulers managed to tighten the reins, leading toward the absolutism of the French Sun King Louis XIV; in other cases, the nobility called the shots. Sometimes parliaments held their own against ambitious sovereigns, and sometimes there were no kings at all and republics prevailed. The details hardly matter: what does is that all of this unfolded side by side. The educated knew that there was no single immutable order, and they were able to weigh the upsides and drawbacks of different ways of organising society.

This led to strong nation-states, global colonialism, and the birth and proliferation of capitalism, but also:

This wasn’t the only way in which western Europe proved uniquely exceptional. It was there that modernity took off – the Enlightenment, the Industrial Revolution, modern science and technology, and representative democracy, coupled with colonialism, stark racism and unprecedented environmental degradation.

Was that a coincidence? Historians, economists and political scientists have long argued about the causes of these transformative developments. Even as some theories have fallen by the wayside, from God’s will to white supremacy, there’s no shortage of competing explanations. The debate has turned into a minefield, as scholars who seek to understand why this particular bundle of changes appeared only in one part of the world wrestle with a heavy baggage of stereotypes and prejudices that threaten to cloud our judgment. But, as it turns out, there’s a shortcut. Almost without fail, all these different arguments have one thing in common. They’re deeply rooted in the fact that, after Rome fell, Europe was intensely fragmented, both between and within different countries. Pluralism is the common denominator.

This stands in contrast to the other technologically advanced, sophisticated society on the far side of the Eurasian landmass: China.

Nothing like this happened anywhere else in the world. The resilience of empire as a form of political organisation made sure of that. Wherever geography and ecology allowed large imperial structures to take root, they tended to persist: as empires fell, others took their place. China is the most prominent example. Ever since the first emperor of Qin (he of terracotta-army fame) united the warring states in the late 3rd century BCE, monopoly power became the norm. Whenever dynasties failed and the state splintered, new dynasties emerged and rebuilt the empire. Over time, as such interludes grew shorter, imperial unity came to be seen as ineluctable, as the natural order of things, celebrated by elites and sustained by the ethnic and cultural homogenisation imposed on the populace.

According to Scheidel, the hot-button social issues of yesterday and today (colonialism, slavery, racism, and exploitative capitalism) were also features of European pluralism, and European nations raced to acquire colonial possessions “more often than not” to keep their rivals from having them. (To be clear, Scheidel thinks these are unambiguous evils; his is a descriptive, not a prescriptive, claim.) In essence, it’s an interesting theory: fragmentation and competition are what allowed Europe, uniquely, to thrive. It stands opposed to other theories, like that of Jared Diamond of Guns, Germs, and Steel fame, who posited that Europe’s geography gave it the civilizational leg up. Personally, I find Scheidel’s theory a touch too Darwinian, and it ignores the ways that Europeans were often radically similar and worked in concord throughout history.

Whatever you think of the theory, it offers a different way to look at the Fall of Rome, that misty calamity that, to this day, serves as the focal point for studying how the ancient world gave way to our own.

Next, Annie Lowrey at The Atlantic makes an impassioned argument contra establishment wisdom on low-wage workers. In “Low-Skill Workers Aren’t a Problem to Be Fixed,” she argues against the tendency of policy makers on the right and left to treat people like line cooks and delivery drivers as a drag on the US economy.

Being a prep cook is hard, low-wage, and essential work, as the past year has so horribly proved. It is also a “low-skill” job held by “low-skill workers,” at least in the eyes of many policy makers and business leaders, who argue that the American workforce has a “skills gap” or “skills mismatch” problem that has been exacerbated by the pandemic. Millions need to “upskill” to compete in the 21st century, or so say The New York Times and the Boston Consulting Group, among others.

Those are ubiquitous arguments in elite policy conversations. They are also deeply problematic. The issue is in part semantic: The term low-skill as we use it is often derogatory, a socially sanctioned slur Davos types casually lob at millions of American workers, disproportionately Black and Latino, immigrant, and low-income workers. Describing American workers as low-skill also vaults over the discrimination that creates these “low-skill” jobs and pushes certain workers to them. And it positions American workers as being the problem, rather than American labor standards, racism and sexism, and social and educational infrastructure. It is a cancerous little phrase, low-skill. As the pandemic ends and the economy reopens, we need to leave it behind.

While I don’t think it’s helpful or necessary to tie the problem of “low-skill” work to every social evil, such as racism or sexism, I stand wholly behind Lowrey’s main premise. The managerial elite (the “Davos types”) are unhappy that millions of Americans either can’t or won’t take on enormous amounts of private debt so they can fit like good little cogs into the new information economy, an economy those workers neither wanted nor asked for, but which was foisted upon them by the same “Davos types.”

This is a problem that, as Lowrey notes, transcends administrations, both Democratic and Republican. During the pandemic, we treated “low-skill” workers as “essential,” realizing that we needed their labor to keep society functioning, but after the pandemic we are back to stigmatizing them. As Lowrey puts it:

This description, like so many descriptions of “low-skill workers,” is abjectly offensive, both patronizing and demeaning. Imagine going up to a person who’s stocking shelves in a grocery store and telling him that he is low-skill and holding the economy back. Imagine seeing a group of nannies and blasting “Learn to code!” at them as life advice. The low-skill label flattens workers to a single attribute, ignoring the capacities they have and devaluing the work they do. It pathologizes them, portraying low-skill workers as a problem to be fixed, My Fair Lady–style.

A society that exalts high-prestige work to the detriment of all else will make itself economically unhealthy, especially when not only prestige but also a decent livelihood is attached to jobs that, by their very nature, not everyone can do. Two symptoms are the two-income trap and low birthrates, issues that Matt Purple at The American Conservative addressed in a recent column titled “Pro-Life in the Fourth Trimester.”

As Purple tells it, America’s obsession with work (and the aforementioned two-income trap), combined with the high cost of childcare and newborns’ need for their parents, leads to one conclusion: America needs to join the rest of the world in guaranteeing paid maternity leave. He lays out a conservative case for it.

Infants need their parents, and from that premise Purple reasons:

All of which raises a question: In a world obsessed with work and rat races and networking happy hours, how do we make sure newborns get the attention they deserve?

The answer is that at least mothers need to be able to stay home, and for that to happen, women who work need maternity leave. Alas the United States is something of an anomaly here. We’re the only first-world country on earth that offers no federal guarantee of time off for early motherhood. Under the Family and Medical Leave Act of 1993, parents can take 12 weeks of unpaid leave so long as they work for the government or a company with 50 employees or more. And plenty of businesses do have their own policies; the Bureau of Labor Statistics estimates that 89 percent of civilian workers have access to unpaid maternity leave.

A lucky few do get access to paid maternity leave, but:

But many moms and dads aren’t nearly so fortunate. This leaves them either scraping pennies to make sure at least one of them can stay home or going the in loco parentis route, putting their babies at the mercy of America’s deeply unaffordable and impersonal childcare system. Now contrast that to the rest of the world. Women in the United Kingdom can take a full year of maternity leave if they wish, with the first six weeks paid at 90 percent of their average weekly earnings and a lesser amount available afterward. Supposedly work-obsessed Japan offers 14 weeks at two thirds pay, six of which are required by law.

I’m not advocating we go full Estonia, which provides 18 months worth of fully paid parental leave (do women ever get hired over there?). And I’m well aware there’s an argument to be made for leaving this to the states and private businesses, both of which have been trending towards more generous maternity policies as it is. Another interesting idea comes by way of Senator Marco Rubio, who has suggested letting new parents dip into their Social Security funds to help finance their leave.

Purple puts this proposed new spending in the context of all the ways in which the American government wastes money hand over fist:

All I’m saying is that the United States is a country that in the coming decades will spend $1.7 trillion on a garbage stealth fighter that doesn’t work. We blew $2 trillion to wrest Afghanistan away from the Taliban and then place it back into the hands of the Taliban. We’re on the hook for untold trillions to foot the retirements of the Baby Boomers. I’m as averse to creating a new entitlement as the next blackhearted right-winger, but it seems to me that smoothing that fourth trimester ought to be at least as much of a priority as launching drone strikes at the same spots of Yemeni sand over and over again. That’s true however we ultimately decide to get the money.

Other first-world nations are in far better fiscal shape than we are, yet still manage to do more by their new mothers. And by all means, don’t subsidize my baby’s care by borrowing from his future. If we go the federal route, offset whatever costs are accrued from other programs, beginning with that bloated military hardware budget. But give parents the peace of mind they need during those stressful and formative days. Nothing could be more important. It shouldn’t be that one in four employed American mothers is already going back to work within two weeks of having her child, as was the case according to a 2012 study.

I think most Americans can agree that more bonding time for mothers and newborns is worth incalculably more than, as Purple puts it, “launching drone strikes at the same spots of Yemeni sand over and over.” Perhaps “babies not bombs” should be the rallying cry of the post-Trump conservative movement.

At any rate, that’s the Week in Viewpoints. Best of luck to all Skyhawks on finals!

Image Credit / The American Conservative